From Raw Data to Model-Ready: Advanced Feature Engineering in Python

Author: Kristoffer Dave Tabong | July 2, 2025

In machine learning, models are only as good as the features they learn from. Raw data, straight from the source, is rarely ready to use. That's where feature engineering becomes critical: transforming raw input into signal-rich features that boost model performance.

With the right tools—Scikit-learn, Pandas, and NumPy—feature engineering becomes not just powerful, but also repeatable, scalable, and ready for production.

This guide walks through building a complete feature engineering pipeline using:

- Pipeline for orchestration

- ColumnTransformer for selective transformations

- NumPy for optimized computation

Key Tools for Structured Feature Engineering

| Tool | Purpose |
|---|---|
| Pipeline | Chain preprocessing and modeling steps into a single executable object |
| ColumnTransformer | Apply different transformations to different subsets of columns |
| NumPy | Ensure performance, storage efficiency, and compatibility with ML models |

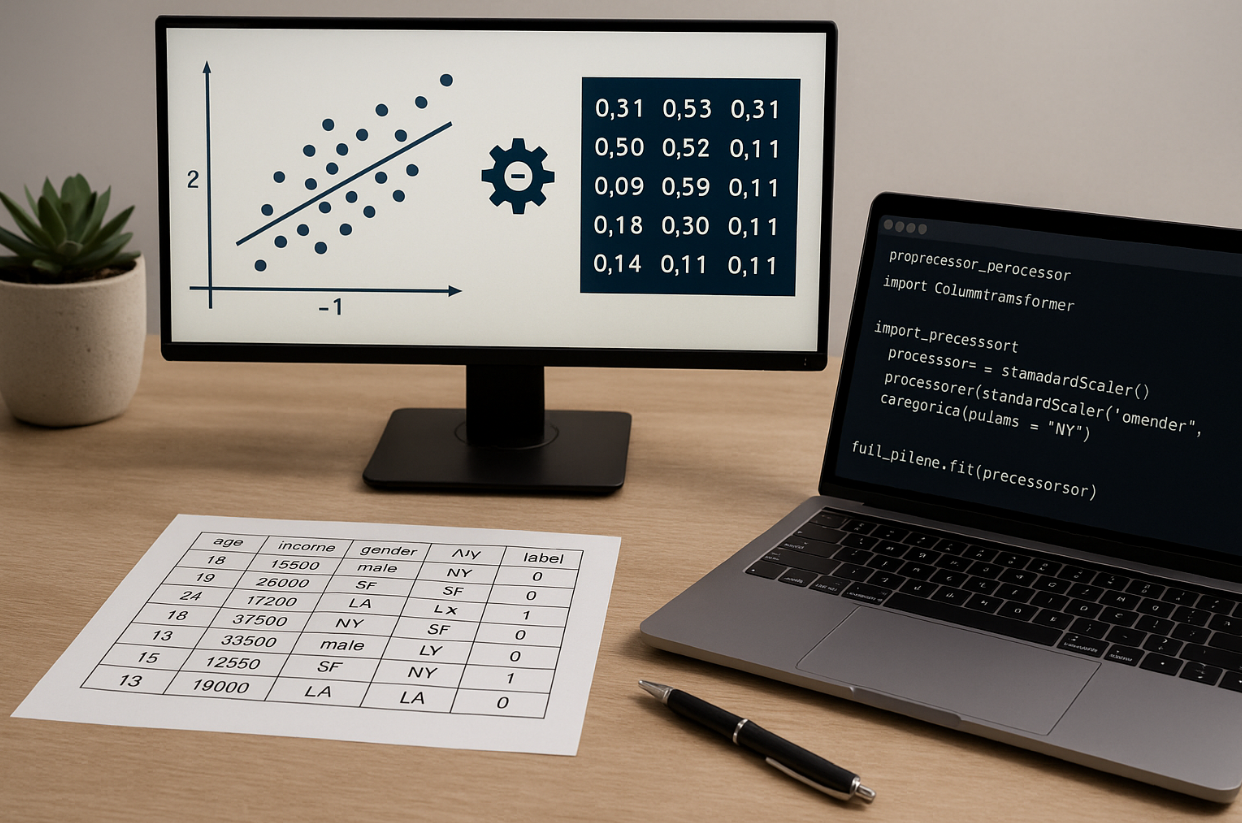

Step 1: Simulating the Dataset

We’ll start by creating a mock dataset that includes both numerical and categorical features. This is useful for testing machine learning pipelines, feature engineering, or preprocessing steps in a realistic way.

Python Code:

import numpy as np

import pandas as pd

# Create a simulated dataset

df = pd.DataFrame({

'age': np.random.randint(18, 70, 20),

'income': np.random.randint(30000, 120000, 20),

'gender': np.random.choice(['male', 'female'], 20),

'city': np.random.choice(['NY', 'SF', 'LA'], 20),

'label': np.random.randint(0, 2, 20)

})

This dataset contains:

- age – a random integer from 18 to 69 (the upper bound passed to np.random.randint is exclusive)

- income – a random integer from 30,000 to 119,999 representing annual income

- gender – randomly chosen between 'male' and 'female'

- city – randomly selected from 'NY', 'SF', and 'LA'

- label – a binary value (0 or 1) representing the target variable

This step lays the foundation for modeling or preprocessing workflows, especially for experimenting with pipelines that involve mixed data types.
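Because the code above draws from NumPy's global random state, every run produces different rows. The following is a minimal sketch of a reproducible variant, assuming a seeded `np.random.default_rng` generator is acceptable for your experiments. The helper name `make_mock_dataset` and the seed value 42 are illustrative choices, not part of the original code.

```python
import numpy as np
import pandas as pd


def make_mock_dataset(n_rows=20, seed=42):
    """Hypothetical helper: build the mock dataset from a seeded generator."""
    rng = np.random.default_rng(seed)
    return pd.DataFrame({
        'age': rng.integers(18, 70, n_rows),            # upper bound is exclusive
        'income': rng.integers(30000, 120000, n_rows),
        'gender': rng.choice(['male', 'female'], n_rows),
        'city': rng.choice(['NY', 'SF', 'LA'], n_rows),
        'label': rng.integers(0, 2, n_rows),
    })


df_a = make_mock_dataset()
df_b = make_mock_dataset()
print(df_a.equals(df_b))  # the same seed yields the same rows
```

Fixing the seed makes it easier to compare preprocessing changes, since differences in output then come from the code rather than from the data.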

Step 2: Preparing the Data

In this step, we separate the target variable from the input features and classify the columns by data type. This is a critical preparation before applying transformations or training a model.

Python Code:

# Separate features and label

X = df.drop(columns='label')

y = df['label']

# Identify column types

numeric_columns = ['age', 'income']

categorical_columns = ['gender', 'city']

What it does:

- X stores the feature set by dropping the 'label' column from the original DataFrame.

- y stores the target variable for classification.

- numeric_columns lists the continuous features that will later be scaled or normalized.

- categorical_columns contains string-based features that will require encoding.

This structured separation allows us to build preprocessing pipelines tailored to each data type.
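The column lists above are typed by hand. As a rough sketch of an alternative, the two groups can be inferred from dtypes with `DataFrame.select_dtypes`. The frame `X_demo` and the `*_inferred` variable names are stand-ins invented for this example.

```python
import pandas as pd

# Small stand-in frame with the same column layout as the article's features
X_demo = pd.DataFrame({
    'age': [25, 40, 31],
    'income': [52000, 88000, 61000],
    'gender': ['male', 'female', 'female'],
    'city': ['NY', 'SF', 'LA'],
})

# Infer the two groups from dtypes instead of listing names manually
numeric_cols_inferred = X_demo.select_dtypes(include='number').columns.tolist()
categorical_cols_inferred = X_demo.select_dtypes(exclude='number').columns.tolist()

print(numeric_cols_inferred)      # ['age', 'income']
print(categorical_cols_inferred)  # ['gender', 'city']
```

Dtype-based inference is convenient but not always right: an integer-coded category such as a postal code would land in the numeric group, so explicit lists remain the safer choice when the schema is known.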

Step 3: Creating Feature Pipelines

Now that we've separated the feature types, we can define preprocessing steps tailored to each. We'll use scikit-learn's Pipeline and ColumnTransformer to streamline and modularize transformations.

Python Code:

from sklearn.pipeline import Pipeline

from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import StandardScaler, OneHotEncoder

# Define numeric preprocessing pipeline

numeric_pipeline = Pipeline([

('scale', StandardScaler())

])

# Define categorical preprocessing pipeline

categorical_pipeline = Pipeline([

('encode', OneHotEncoder(handle_unknown='ignore'))

])

# Combine both pipelines into a single preprocessor

preprocessor = ColumnTransformer([

('num', numeric_pipeline, numeric_columns),

('cat', categorical_pipeline, categorical_columns)

])

What it does:

- numeric_pipeline: Applies StandardScaler to standardize numeric features (mean = 0, std = 1).

- categorical_pipeline: Applies OneHotEncoder to convert categorical variables into binary columns. With handle_unknown='ignore', a category that was not seen during fitting is encoded as all zeros at inference time instead of raising an error.

- preprocessor: Uses ColumnTransformer to apply each pipeline to its own set of columns and concatenate the results into a single feature matrix.

This design ensures clean, consistent preprocessing—numeric and categorical features are handled appropriately and efficiently, setting the stage for model training.
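To make the effect of handle_unknown='ignore' concrete, here is a small sketch that fits an encoder on the three cities used in this guide and then transforms a value it has never seen. The city 'Boston' and the variable names are made up for the illustration.

```python
import pandas as pd
from sklearn.preprocessing import OneHotEncoder

encoder = OneHotEncoder(handle_unknown='ignore')
encoder.fit(pd.DataFrame({'city': ['NY', 'SF', 'LA']}))

# 'Boston' was not present when the encoder was fitted
new_rows = pd.DataFrame({'city': ['SF', 'Boston']})
encoded = encoder.transform(new_rows).toarray()

print(encoder.categories_[0])  # learned categories, sorted: LA, NY, SF
print(encoded)                 # the 'SF' row has a single 1; the 'Boston' row is all zeros
```

An all-zero row keeps inference from failing, but it also means the model receives no city signal for that record, so it is worth monitoring how often unseen categories occur.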

Step 4: Verifying Transformed Features

Before sending preprocessed data into a model, it’s important to inspect the transformed features to ensure that encoding and scaling behave as expected.

Python Code:

# Apply preprocessing transformations

X_transformed = preprocessor.fit_transform(X)

# Retrieve transformed column names

columns_out = preprocessor.get_feature_names_out()

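# Convert the transformed output into a readable DataFrame

# (ColumnTransformer returns a sparse matrix only when the combined output is sparse enough;

# here it is typically a dense array, so call .toarray() only when the method exists)

X_dense = X_transformed.toarray() if hasattr(X_transformed, 'toarray') else X_transformed

X_df_transformed = pd.DataFrame(X_dense, columns=columns_out)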

# Preview the first few rows

print(X_df_transformed.head())

What this step does:

- fit_transform learns the scaling statistics and category lists from X, then applies the full preprocessing step to it.

- get_feature_names_out returns the final column names after transformation (e.g., num__age or cat__city_NY).

- pd.DataFrame(...) turns the transformed array into a labeled DataFrame for easier inspection.

This step is essential to confirm that scaling and encoding have been applied correctly, and that no unexpected behavior has crept into your features.

Step 5: End-to-End Pipeline with a Model

With preprocessing steps defined, we can now add a classifier to the pipeline. This creates a single, streamlined workflow that handles both feature transformation and model training—ensuring consistency across training and inference.

Python Code:

from sklearn.ensemble import RandomForestClassifier

# Combine preprocessing and model into a unified pipeline

full_pipeline = Pipeline([

('features', preprocessor),

('model', RandomForestClassifier())

])

# Fit the pipeline on the full dataset

full_pipeline.fit(X, y)

# Make predictions

predictions = full_pipeline.predict(X)

Why this matters:

- Modularity: Every preprocessing step and model component is encapsulated in a single object.

- Consistency: Ensures that the same transformations used during training are applied during inference.

- Deployment-ready: This unified pipeline can be saved and reused in production with no extra preprocessing code.

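Your pipeline is now fully operational, from raw data to predictions. Because the fitted transformers travel with the model, the risk of mismatched transformations between environments is greatly reduced. Keep in mind that predicting on the same rows used for fitting, as above, only demonstrates the workflow; it does not measure how well the model generalizes.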
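One way to see the "deployment-ready" claim in practice is to fit on a training split, save the whole pipeline, reload it, and confirm the reloaded object predicts identically on held-out rows. This is a sketch under several assumptions: joblib is used for persistence, the file name `pipeline.joblib` is arbitrary, and the dataset is enlarged to 40 seeded rows so that a split leaves a usable test set.

```python
import os
import tempfile

import joblib
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

rng = np.random.default_rng(0)
n_rows = 40  # assumption: more rows than the article's 20, to allow a split
data = pd.DataFrame({
    'age': rng.integers(18, 70, n_rows),
    'income': rng.integers(30000, 120000, n_rows),
    'gender': rng.choice(['male', 'female'], n_rows),
    'city': rng.choice(['NY', 'SF', 'LA'], n_rows),
    'label': rng.integers(0, 2, n_rows),
})
X_all, y_all = data.drop(columns='label'), data['label']
X_train, X_test, y_train, y_test = train_test_split(
    X_all, y_all, test_size=0.25, random_state=0
)

pipe = Pipeline([
    ('features', ColumnTransformer([
        ('num', StandardScaler(), ['age', 'income']),
        ('cat', OneHotEncoder(handle_unknown='ignore'), ['gender', 'city']),
    ])),
    ('model', RandomForestClassifier(random_state=0)),
])
pipe.fit(X_train, y_train)  # scaler statistics come from the training rows only

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'pipeline.joblib')  # hypothetical file name
    joblib.dump(pipe, path)
    restored = joblib.load(path)

# The restored pipeline accepts raw rows and repeats the same preprocessing
same = np.array_equal(pipe.predict(X_test), restored.predict(X_test))
print(same)
```

Since the labels here are random, accuracy on the test split is not meaningful; the point is only that the saved object carries its preprocessing with it. Saved pipelines should be loaded only from trusted sources and with library versions compatible with those used to create them.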

Why Feature Engineering Pipelines Matter

Building feature engineering into a pipeline offers several practical advantages that go beyond just code organization:

- Consistency between development, testing, and deployment — the same transformations are applied every time, reducing bugs and mismatches.

- Modularity — easily add, swap, or tune both transformations and models without rewriting boilerplate logic.

- Cleaner codebase — simplifies experimentation and reduces repetitive manual data wrangling.
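The modularity point can be illustrated with a hyperparameter search over the whole pipeline. In this sketch, parameters are addressed by step name using scikit-learn's double-underscore convention, so a preprocessing option and a model option are tuned together. The grid values, the 60-row seeded dataset, and names such as `tunable_pipeline` are assumptions made for the example.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

rng = np.random.default_rng(0)
n_rows = 60  # assumption: enough rows for 3-fold cross-validation
frame = pd.DataFrame({
    'age': rng.integers(18, 70, n_rows),
    'income': rng.integers(30000, 120000, n_rows),
    'gender': rng.choice(['male', 'female'], n_rows),
    'city': rng.choice(['NY', 'SF', 'LA'], n_rows),
    'label': rng.integers(0, 2, n_rows),
})
X_grid, y_grid = frame.drop(columns='label'), frame['label']

tunable_pipeline = Pipeline([
    ('features', ColumnTransformer([
        ('num', StandardScaler(), ['age', 'income']),
        ('cat', OneHotEncoder(handle_unknown='ignore'), ['gender', 'city']),
    ])),
    ('model', RandomForestClassifier(random_state=0)),
])

# <step>__<transformer>__<parameter> reaches into nested components
param_grid = {
    'features__num__with_mean': [True, False],
    'model__n_estimators': [10, 50],
}
search = GridSearchCV(tunable_pipeline, param_grid, cv=3)
search.fit(X_grid, y_grid)
print(search.best_params_)
```

Because preprocessing is refit inside every cross-validation fold, statistics from validation rows do not leak into the scaler, which is harder to guarantee with hand-written preprocessing code.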

Feature engineering isn’t just about wrangling data—it’s about setting your models up for success. With tools like Scikit-learn Pipelines, ColumnTransformer, and NumPy, you can build workflows that are robust, reusable, and ready for production.

This approach isn’t just for toy datasets—it reflects how modern machine learning systems are engineered at scale in the real world.

Smart preprocessing isn’t a luxury—it’s table stakes for building reliable, maintainable, and high-performing models.
